Replacing Windows NLB with F5 using Ansible

It’s still out there. Windows Network Load Balancing (NLB, or WNLB for the abbrevianados) gave Enterprise IT a quick win for creating high-availability compute clusters, allowing a single IP address to be shared among a number of servers.

The simplicity of NLB meant it proliferated far more than the IPMP of the Unix world, and it was often used in place of expensive hardware load balancers (HLB) from ArrowPoint[1], Cisco and F5. Hey, it was free and built into the OS. Well played, Microsoft.

With the advent of stateless microservice architectures, distributing load across a pool of servers or containers has become the bread and butter of free reverse proxy servers like nginx, Apache and HAProxy, or cloud services like AWS Application Load Balancer or Azure Application Gateway. Yet we deal in realities at Tidal Migrations, and the reality is that hundreds of NLB clusters exist in our clients’ data centers. This needs to change.

Why Drop NLB?

If you are considering a cloud migration, or even a switch hardware upgrade[2], you will want to move off NLB first. Cloud providers like Microsoft Azure simply don’t support it, and it’s preferable to make such configuration changes ahead of a potentially high-visibility cloud migration, especially if you already have an HLB on-premises.

The Solution: PowerShell, Ansible and F5

PowerShell provides programmatic access to your environment’s configuration. Working from spreadsheets or “we shouldn’t haves” is not a reliable way to plan your migration, so get scripting as soon as possible.

We will use PowerShell to interrogate the network, and other scripting languages to manipulate the JSON data that we generate and use to produce Ansible playbooks.

We have chosen F5 for this example as it has been fairly pervasive throughout our client sites.

The following steps are an overview of this migration:

- Inventory: create a data file of all NLB configurations.

- Analyze: can we migrate the NLB IP address to the F5? Do we need to re-IP?

- Automate: generate Ansible playbooks for each NLB cluster required.

- Execute: ansible-playbook -i f5 nlb_clusters.yml

1. Inventory

Thankfully, NLB configurations can be gleaned from PowerShell and coerced into a friendly data format such as JSON:

Note: One of the gotchas to look out for is ports in use. If these are not configured explicitly, and you have a range of ports being load balanced, say 0–65535 (the NLB default port rule), you will need to look a bit deeper at IIS bindings, etc., to find exactly which ports you need to balance.
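Once the PowerShell output is saved as JSON, finding those wide port rules is easy to script. The Python below is a minimal sketch, assuming a hypothetical inventory layout (one object per cluster with `cluster`, `ip`, `nodes` and `port_rules` fields); adapt the field names to whatever your export actually produces. The `MAX_EXPLICIT_SPAN` threshold is an arbitrary choice.

```python
import json

# Hypothetical inventory layout: one object per NLB cluster, roughly what you
# might get by piping PowerShell output through ConvertTo-Json. The field
# names are illustrative, not what the NLB cmdlets emit verbatim.
SAMPLE_INVENTORY = """
[
  {"cluster": "web-nlb01", "ip": "10.1.20.50",
   "nodes": ["10.1.20.11", "10.1.20.12"],
   "port_rules": [{"start": 80, "end": 80, "protocol": "Tcp"},
                  {"start": 443, "end": 443, "protocol": "Tcp"}]},
  {"cluster": "app-nlb02", "ip": "10.1.30.80",
   "nodes": ["10.1.30.21", "10.1.30.22"],
   "port_rules": [{"start": 0, "end": 65535, "protocol": "Both"}]}
]
"""

# Arbitrary threshold: a rule spanning more ports than this needs a closer look.
MAX_EXPLICIT_SPAN = 10


def load_inventory(text):
    """Parse the JSON inventory into a list of cluster dicts."""
    return json.loads(text)


def wide_port_rules(clusters, max_span=MAX_EXPLICIT_SPAN):
    """Return (cluster, start, end) for every port rule wider than max_span."""
    flagged = []
    for cluster in clusters:
        for rule in cluster["port_rules"]:
            if rule["end"] - rule["start"] + 1 > max_span:
                flagged.append((cluster["cluster"], rule["start"], rule["end"]))
    return flagged


if __name__ == "__main__":
    for name, start, end in wide_port_rules(load_inventory(SAMPLE_INVENTORY)):
        print(f"{name}: ports {start}-{end} need a closer look (IIS bindings etc.)")
```

Each flagged cluster is one where you will have to go and find the real listening ports before writing the F5 config.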

2. Analyze

Now that you have a good idea of every NLB instance in your environment, you can look at which networks are impacted. Are these available on your F5? If not, you will need to allocate new IPs to use as Virtual Servers on the F5. This isn’t too bad if you are using DNS for all of your NLB IPs.
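Checking which cluster IPs already sit on a network the F5 can serve comes down to a subnet-membership test. Here is a minimal sketch using Python’s standard `ipaddress` module; the `F5_NETWORKS` list and the sample addresses are hypothetical placeholders for the networks your BIG-IP is actually attached to.

```python
import ipaddress

# Hypothetical: the networks your F5 already has a presence on (for example,
# its self-IP subnets). Replace these with the real list from your BIG-IP.
F5_NETWORKS = [ipaddress.ip_network(n) for n in ("10.1.20.0/24", "10.1.21.0/24")]


def needs_reip(cluster_ip, f5_networks=F5_NETWORKS):
    """True if the NLB cluster IP falls outside every network the F5 is on."""
    addr = ipaddress.ip_address(cluster_ip)
    return not any(addr in net for net in f5_networks)


if __name__ == "__main__":
    for ip in ("10.1.20.50", "10.1.30.80"):
        print(ip, "needs a new IP" if needs_reip(ip) else "can move to the F5")
```

Run this over every cluster IP in the inventory and you have the list of clusters that need a new virtual server address.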

Hint: Now that you have your NLB IPs, perform a DNS Impact Assessment using dnstools.ninja — for NLB IPs without corresponding DNS entries, you’ll have a hard-coded IP issue. You are not alone.
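For a rough first pass in code, a reverse lookup on each cluster IP is a cheap signal. This sketch is not a substitute for a proper DNS impact assessment: a missing PTR record does not prove that no A record points at the IP, and a present one doesn’t prove clients use the name, so treat the output as leads. The resolver is injectable (defaulting to `socket.gethostbyaddr`) so the logic can be exercised without live DNS; `ptr_names` and `missing_ptr` are hypothetical helper names.

```python
import socket


def ptr_names(ip, resolver=socket.gethostbyaddr):
    """Hostnames a reverse (PTR) lookup returns for ip, or [] if none."""
    try:
        hostname, aliases, _addresses = resolver(ip)
    except OSError:  # socket.herror and socket.gaierror both subclass OSError
        return []
    return [hostname, *aliases]


def missing_ptr(cluster_ips, resolver=socket.gethostbyaddr):
    """Cluster IPs with no PTR record: candidates for hard-coded references."""
    return [ip for ip in cluster_ips if not ptr_names(ip, resolver)]
```

Against live DNS you would call something like `missing_ptr([c["ip"] for c in clusters])` using the inventory loaded earlier.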

If you do need new IPs, write some code to allocate them from your IPAM tool.
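Every IPAM product has its own API, so the sketch below stands in for one with a plain function, `allocate_ips` (a hypothetical name), that picks the first free host addresses in a target subnet given what is already in use. Python’s `ipaddress` module handles the subnet arithmetic; in practice you would replace the in-use list with a query to your IPAM tool and reserve the result there.

```python
import ipaddress
from itertools import islice


def allocate_ips(subnet, in_use, count):
    """Return the first `count` free host addresses in subnet.

    A local stand-in for an IPAM call: `in_use` is whatever your IPAM reports
    as taken. A real IPAM would also reserve the addresses it hands out.
    """
    taken = {ipaddress.ip_address(ip) for ip in in_use}
    # hosts() skips the network and broadcast addresses.
    free = (h for h in ipaddress.ip_network(subnet).hosts() if h not in taken)
    picked = [str(h) for h in islice(free, count)]
    if len(picked) < count:
        raise ValueError(f"only {len(picked)} free addresses in {subnet}")
    return picked


if __name__ == "__main__":
    print(allocate_ips("10.1.21.0/29", ["10.1.21.1", "10.1.21.3"], 2))
```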

3. Automate

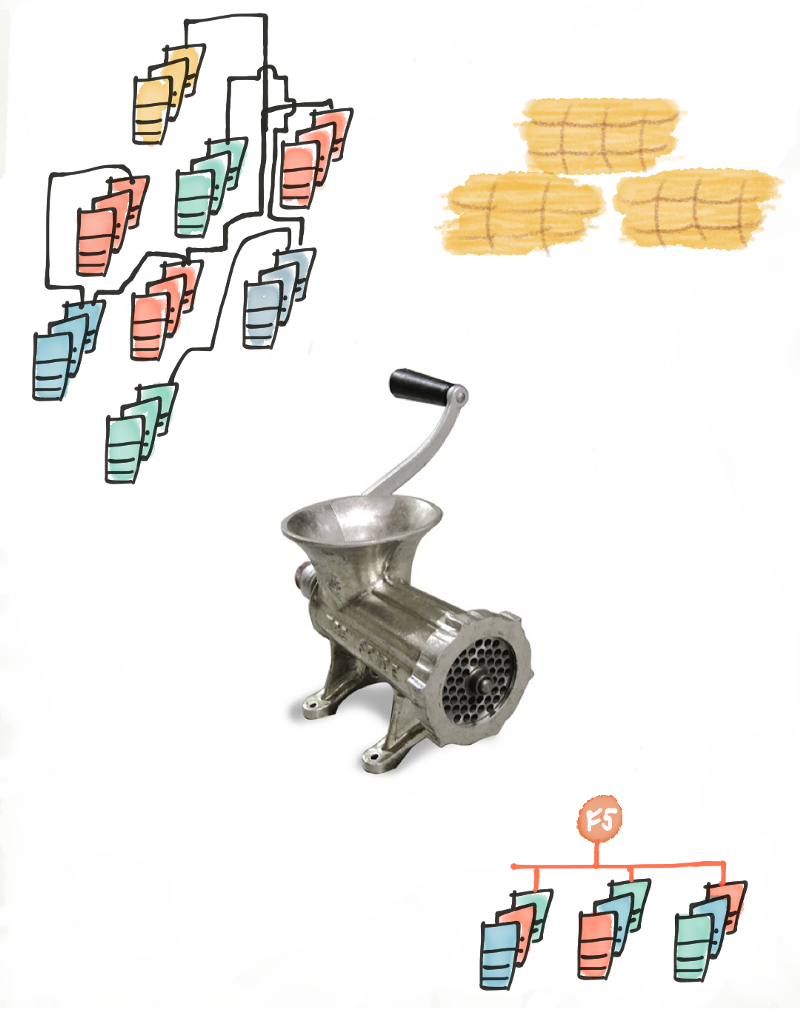

Prepare the meat grinder!

Here we take the existing NLB config and the new IPs (if applicable), and generate a series of Ansible playbooks to both implement and destroy the required virtual servers, pools and nodes on the F5.

Ansible provides a tried and tested toolkit for this. With fantastic support from F5 (thanks Steve!) and a declarative, human-readable syntax, you really can’t go wrong. We check these playbooks into version control and share them with the project team and application owners ahead of implementation, to validate that all the snowflakes are taken care of just so.

Here’s an example playbook for creating one NLB cluster replacement, with three port listeners:
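As a sketch of the meat grinder itself, the Python below renders a create playbook and a matching destroy playbook for one cluster with any number of port listeners. It assumes the `f5networks.f5_modules` Ansible collection (`bigip_node`, `bigip_pool`, `bigip_pool_member`, `bigip_virtual_server`) and a `provider` variable holding the BIG-IP connection details in your inventory; check parameter names against the collection version you run. The layout (one pool and one virtual server per port, round-robin, SNAT automap) and the object naming are illustrative choices, not the only sensible design.

```python
"""Render create/destroy playbooks for one NLB cluster replacement (sketch).

Assumes the f5networks.f5_modules collection and a `provider` variable in
the Ansible inventory. Object names and layout are illustrative.
"""


def _task(module, **params):
    """Render one task as YAML lines; params must be plain scalars."""
    lines = [
        f"    - name: {module} {params['name']}",
        f"      f5networks.f5_modules.{module}:",
    ]
    for key, value in params.items():
        lines.append(f"        {key}: {value}")
    # Connection details come from the inventory, not from this script.
    lines.append('        provider: "{{ provider }}"')
    return lines


def _play(title, tasks):
    """Wrap task lines in a single play that talks to the BIG-IP API locally."""
    header = [
        f"- name: {title}",
        "  hosts: all",
        "  connection: local",
        "  gather_facts: false",
        "  tasks:",
    ]
    return "\n".join(header + tasks) + "\n"


def render_playbooks(cluster, vip, nodes, ports):
    """Return (create_yaml, destroy_yaml): one pool and one VS per port."""
    create, destroy = [], []
    for node in nodes:
        create += _task("bigip_node", name=node, address=node, state="present")
    for port in ports:
        pool = f"{cluster}_{port}_pool"
        create += _task("bigip_pool", name=pool, lb_method="round-robin", state="present")
        for node in nodes:
            create += _task("bigip_pool_member", name=node, pool=pool,
                            address=node, port=port, state="present")
        create += _task("bigip_virtual_server", name=f"{cluster}_{port}_vs",
                        destination=vip, port=port, pool=pool,
                        snat="Automap", state="present")
    # Tear down in reverse dependency order: virtual servers, pools, then nodes.
    for port in ports:
        destroy += _task("bigip_virtual_server", name=f"{cluster}_{port}_vs", state="absent")
    for port in ports:
        destroy += _task("bigip_pool", name=f"{cluster}_{port}_pool", state="absent")
    for node in nodes:
        destroy += _task("bigip_node", name=node, state="absent")
    return _play(f"Create {cluster}", create), _play(f"Destroy {cluster}", destroy)


if __name__ == "__main__":
    create_yml, destroy_yml = render_playbooks(
        "web-nlb01", "10.1.20.50", ["10.1.20.11", "10.1.20.12"], [80, 443, 8080])
    print(create_yml)
```

SNAT automap makes the servers reply via the F5 without changing their default gateway, which keeps the cutover down to an IP or DNS change. The destroy playbook removes virtual servers before pools and pools before nodes, because the BIG-IP refuses to delete an object that another object still references.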

4. Execute

Executing the migration is as simple as running the implementation playbooks and then, in the case of new cluster IPs, cutting over DNS.

ansible-playbook -i f5 nlb_cluster.yml

If you are lucky enough to be able to move the NLB IP to the F5, follow these steps:

- Disable the NLB feature on your Windows servers

- Execute the playbook to configure the F5

- Flush the ARP cache on your switches, or be patient.
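Whether you flush ARP caches or wait them out, a quick TCP check against the cluster IP tells you when the F5 is answering on every balanced port. This is a minimal sketch using only Python’s standard `socket` module, and `unreachable_ports` is a hypothetical helper name. It confirms that a TCP handshake completes, nothing more, so follow up with an application-level check (an HTTP request to the site, say) before calling the cutover done.

```python
import socket


def unreachable_ports(vip, ports, timeout=3.0):
    """Return the ports on vip that refuse or time out a TCP connection."""
    failed = []
    for port in ports:
        try:
            # A completed TCP handshake is all we check; no application traffic.
            with socket.create_connection((vip, port), timeout=timeout):
                pass
        except OSError:  # refused, timed out, unreachable, ...
            failed.append(port)
    return failed
```

For example, `unreachable_ports("10.1.20.50", [80, 443, 8080])` should return an empty list once traffic is flowing through the F5; the address and ports there are placeholders.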

I hope you found this helpful to your migration project. Comments/edits/nits always welcome.

-David

Chief Migration Hacker, Tidal Migrations

This is just one of the pre-migration remediation services we provide at Tidal Migrations. Contact us.

1: This wouldn’t be a trip down memory lane without a reference to this category killer! Remember the acquisition?

2: Cisco 9k doesn’t support NLB